Introduction to Reinforcement Learning for Economists

Run on Google Colab to test out the code!

The purpose of this post is to introduce some basic concepts from the reinforcement learning (RL) literature using the setting and notation that is familiar to economists studying infinite horizon dynamic decision problems. In particular, I make use of Rust (1987)'s bus engine replacement problem as a demonstrative example.

Note: the methods discussed here are mainly concerned with solving the dynamic problem, and not with estimation.

For those unfamiliar with RL, here are some recent breakthrough examples of these methods

- AlphaGo beating human Go champion

- Playing Atari games by only observing raw image input

- Robotic manipulation of arbitrary objects

What is notable about these applications?

They all involve notable complexity:

- A complex/large state space, as in the case of Go

- Complex transition dynamics, which are analytically intractible, as in the case of Atari games

- Complex policy functions, as is the case with the large number of motors involved in robotic manipulation

The reader should keep these more complex applications in mind. This notebook focuses on a simple case for expositional purposes, with some particular features that RL struggles with. The real power of the latter methods is only apparent in much more complex situations, which I hope to revisit in future posts.

Setup and Notation¶

The methods presented here are appropriate for finite Markov Decision Processes, characterized by the following:

States: $x \in \mathcal{X}$, finite

Actions: $a \in \mathcal{A}(x)$, finite

Transition probabilities: $p(x'|x,a)$

Flow utility: $u(x,a)$

Discount rate: $\beta$

Policy function: $a^*(x) \in \mathcal{A}(x)$

The agent's problem: $$ \begin{align} \max_{a^*} \mathbb{E}_{x_1,x_2,\dots} &\Big[ \sum_{t=0}^\infty \beta^t u(x,a) | x_0 \Big] \\ &s.t. \\ a_t &= a^*(x_t) \\ Pr(x_{t+1}|x_t) &= p(x_{t+1}|x_t,a^*(x_t)) \\ \end{align} $$

We can write the problem in recursive form using the Bellman equation

$$ V(x) = \max_{a \in \mathcal{A}(x)} u(x,a) + \beta \sum_{x' \in \mathcal{X}} V(x') p(x'|x,a) $$import random

import matplotlib.pyplot as plt

import torch

class dynamic_problem:

def __init__(self, nbX, nbA, P, u, β):

self.nbX = nbX

self.nbA = nbA

self.P = P

self.u = u

self.β = β

Bus Engine replacement¶

Recall the problem studied by Rust (1987):

- Bus engines get worn over time and require increasing amounts of maintenance each month.

- Alternatively, the entire engine can be replaced at a high fixed cost

Here, the state variable $x$ represents the mileage of the engine. The actions space at every period is $\mathcal{A} = \{0,1\}$, where $1$ implies replacing the engine.

Utilities are:

- $u(x,0) = -c(x)$

- $u(x,1) = -R - c(0)$

with $c(x) = \theta x$. For the remainder, we fix $\theta = 8.6*10^{-4}$ and $R = 8000$.

The transition process for $x$ is s.t.:

- Conditional on $a_t = 0$, $x_{t+1} - x_t \sim$ Exponential($\lambda$)

- Conditional on $a_t = 1$, $x_{t+1} \sim$ Exponential($\lambda$)

This process will be discretized into a 201-point grid for $x \in [0, 300000]$ with a spacing of $1500$ miles in between points.

nbX = 201

nbA = 2

# Constructing the x_grid and transition probabilities

λ = 1/1500

def exp_cdf(c:float, λ):

return 1 - torch.exp(-λ * c)

x_grid = torch.linspace(0,3*10e4, nbX)

x_diff = torch.maximum(x_grid[:,None] - x_grid[None,:], torch.zeros(1)) # Get all the differences

x_diff = torch.cat([x_diff, torch.ones(1, nbX) * 1e36], 0)

P0 = exp_cdf(x_diff, λ) # Get CDFs at all the interval boundaries

P0 = P0[1:,] - P0[:-1,] # Convert to PMF of the intervals

P1 = P0[:,0][:,None].repeat(1,nbX) # Transition probabilities conditional on replacement is just transition probabilities from the first state. The syntax [:,None] adds an empty dimension at the specified place.

P = torch.stack([P0,P1],-1)

# Utilities

θ = 1e-3

R = 8000

u = lambda x : torch.stack([-θ*x, -torch.tensor(R).repeat(len(x))], -1).squeeze()

# Discount factor

β = 0.97

bus_problem = dynamic_problem(nbX, nbA, P, u(x_grid), β)

bus_problem.x_grid = x_grid

Rust (1987) solution¶

Rust's approach uses the contraction mapping property of the Bellman equation to iterate over choice-specific value functions (which we will call Q-functions).

Let: $$ Q(x,a) \equiv u(x,a) + \beta \sum_{x' \in \mathcal{X}} V(x') p(x'|x,a) $$

Then we have the following contraction mapping: $$ Q(x,a) = u(x,a) + \beta \sum_{x \in \mathcal{X}} p(x'|x,a) \arg \max_{y \in \mathcal{Y}(x)} Q_{x'y} $$

This is almost the contraction mapping from Rust (1987), simply without the random utility component.

This can be iterated on $Q(x,a)$ to solve the problem.

Algorithm¶

Denote the current iteration by $k$. Initialize $k=0$, $Q^0$

For every $k=0,1,\dots$:

- $Q^{k+1}(x,a) = u(x,a) + \beta \sum_{x \in \mathcal{X}} p(x'|x,a) \arg \max_{a \in \mathcal{A}(x)} Q^k(x',a), \quad \forall (x,a)$

- Stop when $|Q^k - Q^{k-1}| < \delta$

Key point: notice that at every iteration $k$, the update is performed over $|\mathcal{X}|\cdot|\mathcal{A}|$ points.

def cmap(self, Q_init, tol, max_iter = float('inf')):

# Initialize

Q_k = Q_init.clone()

tol = 1e-8

k = 0

diff_hist = []

diff = tol + 1

while (diff > tol) & (k < max_iter):

# Compute new Q

Q_k1 = self.u + self.β * torch.sum(self.P * Q_k.max(1)[0][:,None,None],0)

# Record difference

diff = torch.norm(Q_k1 - Q_k)

diff_hist.append(diff)

# Update Q

Q_k = Q_k1

k += 1

diff_hist = torch.stack(diff_hist)

if diff <= tol:

print("Iteration converged after " + "{:,}".format(self.nbX*self.nbA*k) + " function updates")

else:

print("Hit maximum iterations after " + "{:,}".format(self.nbX*self.nbA*k) + " function updates")

return Q_k, diff_hist

dynamic_problem.cmap = cmap

%%time

Q_init = torch.ones(bus_problem.nbX, bus_problem.nbA) * -2000

tol = 1e-8

Q_sol_rust, diff_hist_rust = bus_problem.cmap(Q_init,tol)

Iteration converged after 207,432 function updates CPU times: user 209 ms, sys: 109 ms, total: 318 ms Wall time: 49.5 ms

Computational performance¶

In terms of compute time, the classic method is extremely fast, despite having to perform what seems like a large (>200K) number of updates. Partly this can be attributed to the ease of executing the algorithm in parallel with very little overhead.

# Plots

fig, axs = plt.subplots(2, figsize=(10, 10))

axs[0].plot(x_grid, Q_sol_rust[:,1]-Q_sol_rust[:,0], label = "Contraction mapping")

axs[0].set_xlabel("Miles")

axs[0].set_ylabel("Relative value of replacement")

axs[0].legend()

axs[1].plot(x_grid, Q_sol_rust[:,1]-Q_sol_rust[:,0] >= 0, label = "Contraction mapping")

axs[1].set_xlabel("Miles")

axs[1].set_ylabel("Replacement policy")

axs[1].legend()

<matplotlib.legend.Legend at 0x14031d1b0>

Benchmark solution¶

By leveraging the contraction mapping property of the Bellman equation and by iterating over all $(x,a)$ pairs equally, we can be sure that the solution we have obtained is correct up to our specified error tolerance.

To benchmark the other solutions, we can compare:

- The difference in payoffs between replacing and not replacing as implied by the computed Q-functions (upper plot).

- The resulting policy function or argmax (lower plot).

Q-learning¶

Q-learning, introduced by Watkins (1989) is another way of solving such an MDP.

An intuitive description of Q-learning is "learning by doing". It makes use of simulation to iterate on the equation. This affects both which $(x,a)$ pairs are updated at each iteration and how they are updated.

We can break down this approach into two elements: asynchronous updating and stochastic iteration

Asynchronous (and stochastic) update path¶

Whereas Rust (1987) updates every $(x,a)$ at every iteration, the basic Q-learning iteration only updates a single $(x,a)$ per iteration. The choice of $(x,a)$ is determined by simulation, so $y$ is chosen according to the current policy, while the new $x$ is then chosen according to the transition probabilities.

Algorithm¶

Denote the current iteration by $k$. Initialize $k=0$, $x^0$, $Q^0$

For every $k=0,1,\dots, K$:

- $a^k = \arg \max Q^k_(x^k,a)$

- Draw $x^{k+1}$ according to $p(x^{k+1}|x^k,a)$

- Update:

- If $(x,a) = (x^k,a^k)$: $Q^{k+1}(x,a) = u(x,a) + \beta \sum_{x \in \mathcal{X}} p(x'|x,a) \arg \max_{y \in \mathcal{Y}(x)} Q^k(x',a)$

- Otherwise: $Q^{k+1}_{xy} = Q^k(x,a)$

- Non-standard: check for convergence by keeping a running history of the size of function updates

Key point: note that, in contrast to the previous solution, the update is only performed at one point in $(x,a)$ space at every iteration. In principle, this leads to a more efficient use of information, since the update of one $(x,a)$ pair can immediately be used in the update of another $(x',a')$ pair instead of using 'outdated' information. This is a general feature of asynchronous iterative methods and usually comes at the cost of reduced parallelizability.

1st issue: Exploration¶

Picking $a^k = \arg \max Q^k(x^k,a)$ has the advantage of naturally improving our estimate of $Q$ at the points that occurr most frequently in reality.

However, in the beginning, our guess for $Q$ may be off substantially, and we may fail to pick a $\tilde{a}$ because the current guess for $Q(x,\tilde{a})$ is too low. Since we never pick it, the value can never be updated, and the iteration gets stuck in a suboptimal path.

To fix this, we need to incoporate some form of exploration.

The simplest form of this is to pick $a^k$ according to the $\epsilon$-greedy policy:

- With probability $1-\epsilon$: $a^k = \arg \max Q^k(x^k,a)$

- With probability $\epsilon$: pick $a^k$ randomly

Depending on the transition matrix, some exploration in terms of the state space $\mathcal{X}$ may also be needed. This is apparent in the bus engine replacement problem, since higher-mileages can only be observed after passing through the lower mileages, so estimates at these states can be very noisy. A simple way to accomplish this is by periodically resetting the state variable randomly.

# Asynchronous updating component of Q-learning

@torch.jit.script

def Q_learn0_script(U, P, β:float, nbX:int, nbA:int, Q_init, x_init, eps:float, tol:float, buffer_size:int, max_iter:float = float('inf'), reset_freq:float = float('inf')):

# Initialize

Q_k = Q_init.clone()

x_k = x_init

a_k = Q_k[x_k].argmax()

k = 0

diff_buffer = torch.ones(buffer_size)*tol

while (torch.norm(diff_buffer) > tol) and (k < max_iter):

# epsilon-greedy choice of a

if torch.rand(1) > eps:

a_k = Q_k[x_k].argmax()

else:

a_k = torch.randint(nbA, (1,))[0].long()

# Calculate the temporal difference and add to buffer

TD = U[x_k,a_k] + β * torch.sum(P[:,x_k,a_k] * Q_k.max(1)[0]) - Q_k[x_k,a_k]

diff_buffer[k % buffer_size] = TD

# Update Q

Q_k[x_k,a_k] += TD

# Update x, possibly randomly

if k % reset_freq == 0:

x_k = torch.randint(nbX,(1,))[0].long()

else:

x_k = torch.multinomial(P[:,x_k,a_k],1)[0]

k += 1

return Q_k, diff_buffer, k

def Q_learn0(self, Q_init, x_init, eps:float, tol:float, buffer_size:int, max_iter = float('inf'), reset_freq:float = float('inf')):

Q_k, diff_buffer, k = Q_learn0_script(self.u, self.P, self.β, self.nbX, self.nbA, Q_init, x_init, eps, tol, buffer_size, max_iter, reset_freq)

if torch.norm(diff_buffer) <= tol:

print("Iteration converged after " + "{:,}".format(k) + " function updates")

else:

print("Hit maximum iterations after " + "{:,}".format(k) + " function updates")

return Q_k, diff_buffer

dynamic_problem.Q_learn0 = Q_learn0

# Reasonable training parameters

x_init = torch.tensor(0)

eps = 0.02

tol = 1e-8

buffer_size = bus_problem.nbX*bus_problem.nbA

max_iter = 300000

reset_freq = 20

%time Q_sol_learn0, diff_buffer_learn0 = bus_problem.Q_learn0(Q_init, x_init, eps, tol, buffer_size, max_iter, reset_freq)

Iteration converged after 175,597 function updates CPU times: user 1min, sys: 36.5 s, total: 1min 36s Wall time: 14.1 s

# Plots

axs[0].plot(x_grid, Q_sol_learn0[:,1]-Q_sol_learn0[:,0], label = "Asynchronous updating")

axs[0].legend()

axs[1].plot(x_grid, Q_sol_learn0[:,1]-Q_sol_learn0[:,0] > 0, label = "Asynchronous updating")

axs[1].legend()

fig

Solution comparison¶

Comparing the solution with the benchmark, we see that the solution generally matches very well, except for a few points. This demonstrates the stochastic nature of the update process and the consequent difficulty of verifying convergence.

Furthermore, notice that the total number of function updates is comparable to the synchronous and parallel update method, while being much slower due to lack of parallelization and general overhead from executing a loop.

The lackluster improvement in terms of the number of function updates has partly to do with the nature of the problem, which results in heavily skewed sampling of the $(x,a)$ space.

# Randomly pick a state every time

%time _ = bus_problem.Q_learn0(Q_init, x_init, 0.05, tol, buffer_size, max_iter, reset_freq = 1);

Iteration converged after 112,803 function updates CPU times: user 21.3 s, sys: 11.3 s, total: 32.6 s Wall time: 4.42 s

Here, we re-run the algorithm but set the reset frequency to 1, such that the state $x$ is randomized at every iteration, yields a much stronger improvement in the number of function updates required.

Stochastic iteration¶

Now reconsider the term: $p(x'|x,a) \arg \max_{y \in \mathcal{Y}(x)} Q^k_{x'y}$. In some cases, this can be very expensive to compute, in particular when the transition probabilities $p(x'|x,a)$ are intractable or take on a complex form.

Recall that we are already drawing the next state $x^{k+1}$ from $p(x'|x,a)$ anyways. We can use this to get a very noisy estimate of the above expectation, and use averaging over many periods to smooth out the noise, sing the same principle as stochastic optimization.

Essentially, when we visit $(x^k,a^k)$ in iteration $k$ and get a draw of the following state $x^{k+1}$, this gives a noisy estimate of $Q(x^k,a^k)$:

$$\tilde{Q}(x^k,a^k) = u(x^k,a^k) + \beta \arg \max_{a'} Q^k(x^{k+1},a')$$Because this estimate is noisy, we want to average it over multiple visits of $(x,a)$, so we do not fully update $Q$ to the new value.

Algorithm¶

Initialize $k=0$, $x^0$, $Q^0$.

For every $k=0,1,\dots$:

- $a^k = \arg \max Q^k(x^k,a)$

- Draw $x^{k+1}$ according to $p(x'|x^k,a^k)$

- Get the target value: $\tilde{Q}(x^k,a^k) = u(x^k,a^k) + \beta \arg \max_{a'} Q^k(x^{k+1},a')$

- Update:

- $Q^{k+1}(x,a) = Q^{k}(x,a) + \alpha^k (\tilde{Q}(x^k,a^k) - Q^k(x,a))$ if $(x,a) = (x^k,a^k)$

- $Q^{k+1}(x,a) = Q^k(x,a)$ otherwise

Key point: note that the updates do not require integrating over the future possible states $x'$. In this example with a pre-computed Markov transition matrix and a small state space, this provides little to no advantage. However, in situations with a very large state space and expensive to compute transition probabilities, this can be a significant advantage.

2nd issue: Convergence¶

With the new form of updating, we no longer have the convergence guarantees of the contraction mapping.

Instead, $Q^k$ will behave more like a random variable. If $\alpha^k$ is held fixed, it will never completely converge, only bounce around in the neighborhood of the true $Q$.

However, there are conditions on the sequence of $\alpha^k$ which should guarantee convergence:

- $\sum_{k=0}^\infty \alpha^k = \infty$

- $\sum_{k=0}^\infty \alpha^k < \infty$

Sutton and Barto (2020), p.33

In practice, these conditions are not strictly observed, particularly because they can lead to very slow convergence rates. However, for econometric applications, guaranteed convergence may be more important.

# Full Q-learning

@torch.jit.script

def Q_learn1_script(U, P, β:float, nbX:int, nbA:int, Q_init, x_init, α_k:float, eps:float, tol:float, buffer_size:int, max_iter:float = float('inf'), reset_freq:float = float('inf')):

# Initialize

Q_k = Q_init.clone()

x_k = x_init

a_k = Q_k[x_k].argmax()

k = 0

diff_buffer = torch.ones(buffer_size)*tol + 1

while (torch.abs(torch.mean(diff_buffer)) > tol) and (k < max_iter):

# epsilon-greedy choice of y

if torch.rand(1) > eps:

a_k = Q_k[x_k].argmax()

else:

a_k = torch.randint(nbA, (1,))[0].long()

# draw next x

x_k1 = torch.multinomial(P[:,x_k,a_k],1)[0]

# Calculate the temporal difference and add to buffer

TD = U[x_k,a_k] + β * Q_k[x_k1].max() - Q_k[x_k,a_k]

diff_buffer[k % buffer_size] = TD

# Update Q

Q_k[x_k,a_k] += α_k * TD

# Update x, possibly randomly

if k % reset_freq == 0:

x_k = torch.randint(nbX,(1,))[0].long()

else:

x_k = x_k1

k += 1

return Q_k, diff_buffer, k

def Q_learn1(self, Q_init, x_init, α_k:float, eps:float, tol:float, buffer_size:int, max_iter = float('inf'), reset_freq:float = float('inf')):

Q_k, diff_buffer, k = Q_learn1_script(self.u, self.P, self.β, self.nbX, self.nbA, Q_init, x_init, α_k, eps, tol, buffer_size, max_iter, reset_freq)

if torch.norm(diff_buffer) <= tol:

print("Iteration converged after " + "{:,}".format(k) + " function updates")

else:

print("Hit maximum iterations after " + "{:,}".format(k) + " function updates")

return Q_k, diff_buffer

dynamic_problem.Q_learn1 = Q_learn1

x_init = torch.tensor(0)

α_k = 0.1

eps = 0.04

tol = 1e-8

buffer_size = bus_problem.nbX*bus_problem.nbA

max_iter = 1000000

reset_freq = 20

%time Q_sol_learn1, diff_buffer_learn1 = bus_problem.Q_learn1(Q_init, x_init, α_k, eps, tol, buffer_size, max_iter, reset_freq)

Hit maximum iterations after 852,508 function updates CPU times: user 44.2 s, sys: 566 ms, total: 44.7 s Wall time: 43.8 s

# Plot

axs[0].lines.pop()

axs[0].plot(x_grid, Q_sol_learn1[:,1]-Q_sol_learn1[:,0], label = "Q-learning")

axs[0].legend()

axs[1].lines.pop()

axs[1].plot(x_grid, Q_sol_learn1[:,1]-Q_sol_learn1[:,0] > 0, label = "Q-learning")

axs[1].legend()

fig

Solution comparison¶

We can see here that the computed Q-functions approximate the benchmark fairly closely, though the noise due to stochastic iteration is apparent, even leading to an incorrect policy function in some states.

However, one should also note that this was accomplished with roughly 5 times as many updates, even though we were previously integrating over a state space of $|\mathcal{X}| = 201$. In cases where the computation of transition probabilities is the main computational burden, this represents a speedup factor of roughly 40!

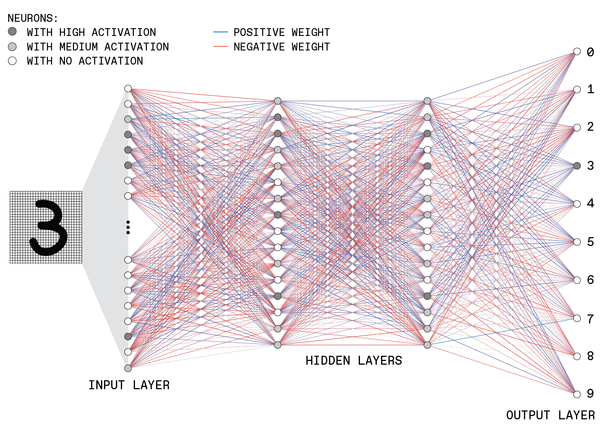

Deep Q-learning¶

So far, the algorithms presented use a large table to represent the Q-values (they are all tabular algorithms). In cases with large state and actions spaces, this implies that (a) a large table is needed to store the values (poor scaling in space complexity) and (b) a large number of updates is needed to reach convergence everywhere.

The recent breakthroughs linked at the beginning of this notebook were only made possible by combining Q-learning with state of the art deep neural networks, which can represent the Q-values much more sparsely and are generally flexible enough to approximate whatever underlying structure the Q-values have.

In general, any universal function approximator will work, as we typically expect the Q-values to be smooth enough in $(x,a)$ such that neighboring pairs will have similar Q-values. Using a function approximator will then also speed up convergence, since a single update can affect a larger region of the $(x,a)$ space.

Finally, the use of such an approximation also allows one to dispense with the discretization of a continuous state space.

In the following, let $Q^\theta$ denote an approximation to $Q$ parametrized by $\theta$.

Algorithm¶

Initialize $k=0$, $x^0$, $\theta^0$.

For every $k=0,1,\dots$:

- $a^k = \arg \max Q^{\theta^k}(x^k,a)$

- Draw $x^{k+1}$ according to $p(x'|x^k,a^k)$

- Get the target value: $\tilde{Q}^k = u(x^k,a^k) + \beta \arg \max_{a'} Q^{\theta^k}(x^{k+1},a') $

- Update:

- $\theta^{k+1} = \theta^k + \alpha^k \nabla_\theta \big( \tilde{Q}^k - Q^{\theta^k}(x^k,a^k) \big)$

Notice: the update step corresponds to gradient descent of the problem: $\arg \min_\theta \big(\tilde{Q}^k - Q^{\theta^k}_{x^ka^k}\big)^2 $

# Defining the neural network structure.

from torch import nn

import torch.nn.functional as F

from torch import optim

class Fork_Net(nn.Module):

def __init__(self, L1_size, L2_size, rescale_factor):

super(Fork_Net, self).__init__()

self.fc11 = nn.Linear(1, L1_size)

self.fc12 = nn.Linear(L1_size, L2_size)

self.fc13 = nn.Linear(L2_size, 1)

self.fc21 = nn.Linear(1, L1_size)

self.fc22 = nn.Linear(L1_size, L2_size)

self.fc23 = nn.Linear(L2_size, 1)

self.rescale_factor = rescale_factor

def forward(self, X):

x1 = F.relu(self.fc11(X*self.rescale_factor))

x1 = F.relu(self.fc12(x1))

x1 = self.fc13(x1)

x2 = F.relu(self.fc21(X*self.rescale_factor))

x2 = F.relu(self.fc22(x2))

x2 = self.fc23(x2)

return torch.cat([x1,x2], -1)

def deep_Q_learn(self, Q_net, x_init, α_k:float, eps:float, tol:float, buffer_size:int, max_iter:float = float('inf'), reset_freq:float = float('inf'), batch_size = 1):

# Initialize

opt_Q = optim.Adam(Q_net.parameters(), lr=α_k)

x_k = torch.tensor([x_init])

a_k = Q_net(self.x_grid[x_k]).argmax()

k = 0

diff_buffer = torch.ones(buffer_size)*tol + 1

while (torch.mean(diff_buffer) > tol) and (k < max_iter):

obj = 0

for j in range(batch_size):

# epsilon-greedy choice of y

if random.random() > eps:

a_k = Q_net(self.x_grid[x_k]).argmax()

else:

a_k = random.randint(0,1)

# draw next x

x_k1 = torch.multinomial(self.P[:,x_k[0],a_k],1)

# Calculate the temporal difference and add to objective

TD = self.u[x_k, a_k] + self.β * Q_net(self.x_grid[x_k1]).max().detach() - Q_net(self.x_grid[x_k])[a_k] # detach is used so that the gradient is not computed wrt to this term

obj += (TD**2)/2

# Update x, possibly randomly

if (j + k*batch_size) % reset_freq == 0:

# x_k = self.x_grid[random.randint(0,self.nbX-1)].unsqueeze(0) * 1.5

x_k = torch.tensor([random.randint(0,self.nbX-1)])

else:

x_k = x_k1

diff_buffer[k % buffer_size] = obj[0]/batch_size

# Update network parameters

opt_Q.zero_grad()

(obj/batch_size).backward()

opt_Q.step()

k += 1

if torch.norm(diff_buffer) <= tol:

print("Iteration converged after " + "{:,}".format(k*batch_size) + " function updates")

else:

print("Hit maximum iterations after " + "{:,}".format(k*batch_size) + " function updates")

return Q_net, diff_buffer

dynamic_problem.deep_Q_learn = deep_Q_learn

Initial parameters¶

The parameters are initialized by the pytorch library. For context, here are the Q-functions given by the initial neural network.

# Initialized network

Q_net = Fork_Net(16,16,1/3e5)

plt.plot(Q_net(x_grid[:,None]).detach())

[<matplotlib.lines.Line2D at 0x140551c60>, <matplotlib.lines.Line2D at 0x140551c00>]

x_init = torch.tensor(0)

α_k = 5e-2

eps = 0.03

tol = 1e-8

buffer_size = bus_problem.nbX*bus_problem.nbA

max_iter = 1500

reset_freq = 20

batch_size = 20

%time Q_net, diff_buffer_deep = bus_problem.deep_Q_learn(Q_net, x_init, α_k, eps, tol, buffer_size, max_iter, reset_freq, batch_size)

Hit maximum iterations after 30,000 function updates CPU times: user 8.41 s, sys: 157 ms, total: 8.56 s Wall time: 8.28 s

Q_sol_deep = Q_net(x_grid[:,None]).detach()

# Plot

axs[0].lines.pop()

axs[0].plot(x_grid, Q_sol_deep[:,1]-Q_sol_deep[:,0], label = "Deep Q-learning")

axs[0].legend()

axs[1].lines.pop()

axs[1].plot(x_grid, Q_sol_deep[:,1]-Q_sol_deep[:,0]>0, label = "Deep Q-learning")

axs[1].legend()

fig

Solution comparison¶

We can immediately see that the approximation excessively smooths out the value functions. In principle this can be remedied with a larger number of iterations and smaller step-sizes, at the cost of increased computing time. This essentially echoes the bias-variance trade-off that is found throughout machine learning and non-parametric estimation.

However, note that even with a clear bias in the value functions, the resulting policy function is not far off, with replacement occurring a bit earlier. This demonstrates that large biases in the value functions need not translate into the same level of biases in the policy functions.

Finally, note that this solution was obtained after only 30,000 function updates. When compared to the tabular Q-learning solution obtained after 1 million iterations, this represents a speedup factor of 30!

Conclusion¶

The objective of this notebook was to give a brief but concrete introduction to some of the methods used in the modern reinforcement learning literature.

The key advantages are:

- Less iterations required to reach an adequate solution

- Far less computations of transition probabilities

- Can be used with a simulation model without analytical transition probabilities

- Naturally combines well with function approximation to deal with large state-action spaces

The key disadvantages are:

- Convergence is no longer guaranteed and can be difficult to verify

- Parallelization is possible but less trivial

- Function approximation introduces bias

As a reminder, the example problem here is used for its familiarity to economists, but certainly does a poor job of showing the advantage of reinforcement learning techniques. In future notebooks, I hope to provide other examples that better showcase the power of these tools.

References¶

Setup and notation:

- Rust, J. (1987). Optimal Replacement of GMC Bus Engines: An Empirical Model of Harold Zurcher. Econometrica, 55(5), 999-1033.

Papers in economics:

- Iskhakov, F., Rust, J., & Schjerning, B. (2020). Machine learning and structural econometrics: contrasts and synergies. The Econometrics Journal, 23(3), S81-S124.

- Igami, M. (2020). Artificial intelligence as structural estimation: Deep Blue, Bonanza, and AlphaGo. The Econometrics Journal, 23(3), S1-S24.

Reference textbook:

Web resources: